Understanding LLMs Through the Lens of Customer Support

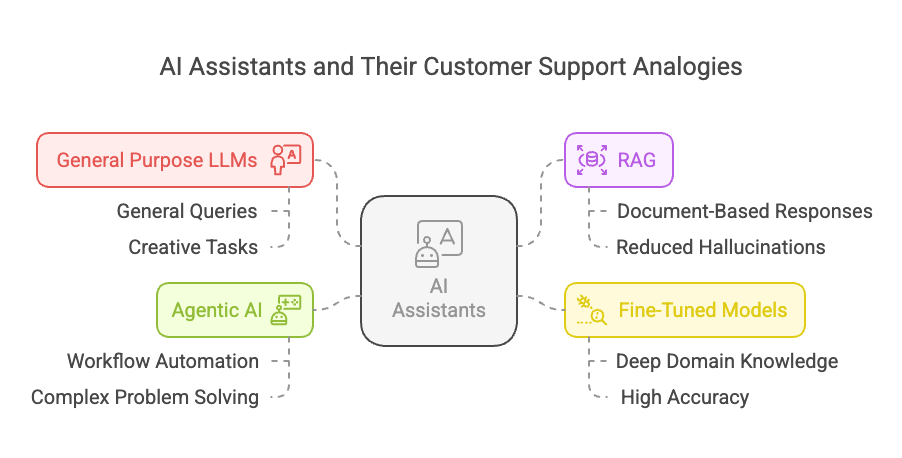

Have you been inundated with terms like RAG, fine-tuning, and agentic AI, and wondered what they actually mean? These buzzwords get tossed around a lot, but the concepts behind them aren't as complicated as they might seem. In fact, there's a surprisingly simple way to understand different AI approaches: they mirror different levels of expertise in customer support teams.

Why Customer Support Makes a Perfect Analogy

Think about it. Not every customer question requires the same level of knowledge or authority to answer, right? Some questions are straightforward enough for any team member to handle. Others require specialized expertise or even the authority to make decisions without supervisor approval.

🤖 AI works the same way. Different AI techniques represent different levels of expertise, specialization, and autonomy—each with corresponding differences in cost and capability.

Let's explore each approach through the lens of customer support roles.

🧠 1. General-Purpose LLMs: The New Hire with General Training

Imagine a support rep who has just finished school and college. They have broad general knowledge and some experience with customer service from the customer's side, but they don't know your company's specific policies yet. When faced with a tricky question, they might make an educated guess rather than say, "I don't know."

💡 This is what general-purpose large language models (LLMs) such as ChatGPT or Claude are like when used straight out of the box.

✅ What They're Good At:

Handling a wide range of general questions

Creative tasks like drafting and brainstorming

Conversations that don't require specialized knowledge

❌ Where They Struggle:

They sometimes "hallucinate"—confidently stating incorrect information

They only know what they were trained on, not your specific data

They can't access real-time information unless provided in the prompt

📌 When to Use Them: For non-critical applications where general knowledge is sufficient and some inaccuracy is tolerable. Great for drafting, ideation, and handling common questions.

💰 Cost Factor: Typically the least expensive option, making them a good starting point for many projects.

📚 2. Retrieval-Augmented Generation (RAG): The Rep with Access to the Knowledge Base

Now imagine a support rep who may not know everything off the top of their head, but they know exactly where to look for answers. Before responding to a customer, they quickly check the company knowledge base to ensure their information is accurate and up to date.

💡 This is RAG in a nutshell. It combines the general abilities of an LLM with the ability to search through specific documents for relevant information before generating a response.
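The retrieve-then-generate flow can be sketched in plain Python. This is a minimal illustration built on several assumptions: the three knowledge-base articles are invented, a simple keyword-overlap score stands in for the embedding-based vector search most production RAG systems use, and the sketch stops at building the augmented prompt rather than calling any particular LLM API.

```python
import re
from collections import Counter

# Hypothetical knowledge-base articles standing in for your real documentation.
KNOWLEDGE_BASE = {
    "refund-policy": "Refund requests are accepted within 30 days of purchase with a receipt.",
    "shipping-times": "Standard shipping takes 5 to 7 business days.",
    "password-reset": "Reset your password from the login page using the Forgot Password link.",
}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query: str, document: str) -> int:
    """Crude relevance score: how many query words also appear in the document."""
    doc_words = set(tokenize(document))
    return sum(count for word, count in Counter(tokenize(query)).items() if word in doc_words)


def retrieve(query: str, k: int = 1) -> list[str]:
    """Return the IDs of the k highest-scoring articles, dropping any with zero overlap."""
    scored = [(score(query, text), doc_id) for doc_id, text in KNOWLEDGE_BASE.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc_id for points, doc_id in scored[:k] if points > 0]


def build_prompt(query: str, k: int = 1) -> str:
    """Assemble the augmented prompt: retrieved articles first, then the customer's question."""
    context = "\n".join(f"[{doc_id}] {KNOWLEDGE_BASE[doc_id]}" for doc_id in retrieve(query, k))
    return (
        "Answer using only the articles below. "
        "If they don't cover the question, say you don't know.\n\n"
        f"Articles:\n{context}\n\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    # In a real system this prompt would be sent to the LLM; here we just print it.
    print(build_prompt("How many days do I have to request a refund?"))
```

Swapping the keyword score for embedding similarity would change only `score`; the retrieve, assemble, and generate steps stay the same.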

✅ What It's Good At:

Providing responses grounded in your specific documentation

Staying current when your information changes

Substantially reducing hallucinations (though not eliminating them)

Handling specialized questions without needing to be retrained

❌ Where It Struggles:

Building and maintaining the knowledge base requires effort

Search quality impacts response quality

📌 When to Use It: When you need accurate responses based on specific documentation, especially when that information changes frequently. Perfect for customer support, internal knowledge bases, and compliance-heavy industries.

💰 Cost Factor: Moderate investment. Requires infrastructure for document storage and retrieval but is often less expensive than fine-tuning.

🎓 3. Fine-Tuned Models: The Specialized Expert

Some customer issues require deep domain expertise. Think of a support specialist who’s spent years becoming an expert in one specific area of your product or service. They don’t need to look things up because the specialized knowledge has become second nature to them.

💡 This is what fine-tuning accomplishes. It takes a general LLM and trains it further on focused, specialized data until that knowledge becomes deeply integrated into the model itself.
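Much of the practical work in fine-tuning is assembling that specialized data. The Python sketch below converts hypothetical resolved support tickets into chat-style training records in JSON Lines format, one example per line. The `messages`/`role`/`content` layout mirrors the chat format several hosted fine-tuning services accept, but treat it as an assumption and check your provider's documentation for the exact schema. The training run itself is not shown.

```python
import json

# Hypothetical resolved tickets written by your specialists.
# Real fine-tuning datasets are usually far larger than two examples.
RESOLVED_TICKETS = [
    {
        "question": "Can I downgrade my plan mid-cycle?",
        "answer": "Yes. The downgrade takes effect at the start of your next billing cycle.",
    },
    {
        "question": "Do you offer annual billing?",
        "answer": "Yes. Annual plans are billed once a year at a discounted rate.",
    },
]

# Hypothetical system prompt describing the specialist role the model should learn.
SYSTEM_PROMPT = "You are a billing support specialist for ExampleCo."


def to_training_example(ticket: dict) -> dict:
    """Turn one question/answer pair into a chat-style training record."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": ticket["question"]},
            {"role": "assistant", "content": ticket["answer"]},
        ]
    }


def to_jsonl(tickets: list[dict]) -> str:
    """Serialize the records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(to_training_example(t)) for t in tickets)


if __name__ == "__main__":
    print(to_jsonl(RESOLVED_TICKETS))
```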

✅ What They're Good At:

Deep expertise in specific domains

Higher accuracy for specialized tasks

Less reliance on external knowledge sources

More consistent outputs in their area of specialization

❌ Where They Struggle:

Expensive to train and maintain

Need retraining when information changes

Might perform worse on general topics outside their specialty

📌 When to Use Them: For specialized applications where deep domain expertise is critical, and the knowledge domain is relatively stable. They shine in fields like healthcare, finance, legal, and technical industries.

💰 Cost Factor: Higher investment. Requires specialized datasets and more computational resources than RAG or general LLMs.

🤖 4. Agentic AI: The Autonomous Problem-Solver

Finally, imagine a senior support specialist who not only answers questions but has the authority to take actions on behalf of the customer. They don’t just explain how to process a refund—they actually process it.

💡 That’s what agentic AI does. These systems don’t just respond to queries; they take actions to complete tasks autonomously.
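To make the difference from a plain chatbot concrete, here is a minimal Python sketch of an agent loop for the refund example. Everything in it is hypothetical: the order records, the `look_up_order` and `issue_refund` tools, and the $100 refund limit. A hard-coded `plan_next_step` function stands in for the LLM, which in a real agent would choose the next tool itself. The action log, step cap, and escalation rule illustrate the kind of defined parameters and safeguards these systems need.

```python
from typing import Optional

# Hypothetical order records standing in for a real order-management system.
ORDERS = {
    "A100": {"amount": 40.0, "refunded": False},
    "B200": {"amount": 900.0, "refunded": False},
}

# Hypothetical policy: refunds above this amount must be approved by a human.
REFUND_LIMIT = 100.0


def look_up_order(order_id: str) -> Optional[dict]:
    """Tool: fetch an order record, or None if it doesn't exist."""
    return ORDERS.get(order_id)


def issue_refund(order_id: str) -> None:
    """Tool: mark the order as refunded (a real system would call a payments API)."""
    ORDERS[order_id]["refunded"] = True


def plan_next_step(state: dict) -> tuple[str, str]:
    """Stand-in for the LLM: pick the next action from what has happened so far.

    In a real agent, the model would make this choice via tool calling;
    hard-coded rules keep the sketch deterministic.
    """
    if "order" not in state:
        return "look_up_order", state["order_id"]
    if state["order"] is None:
        return "finish", "I couldn't find that order."
    if state.get("refund_issued"):
        return "finish", "Your refund has been processed."
    if state["order"]["refunded"]:
        return "finish", "This order was already refunded."
    if state["order"]["amount"] > REFUND_LIMIT:
        return "escalate", "This refund needs approval, so I've passed it to a colleague."
    return "issue_refund", state["order_id"]


def run_refund_agent(order_id: str, max_steps: int = 5) -> tuple[str, list[str]]:
    """Loop: ask the planner for an action, execute it, repeat until done."""
    state: dict = {"order_id": order_id}
    actions: list[str] = []  # audit trail of every action, useful for monitoring
    for _ in range(max_steps):  # step cap guards against runaway loops
        action, arg = plan_next_step(state)
        actions.append(action)
        if action == "look_up_order":
            state["order"] = look_up_order(arg)
        elif action == "issue_refund":
            issue_refund(arg)
            state["refund_issued"] = True
        else:  # "finish" or "escalate" ends the run with a message for the customer
            return arg, actions
    return "Stopped: step limit reached.", actions
```

Calling `run_refund_agent("A100")` looks up the order, issues the refund, and finishes, while `run_refund_agent("B200")` stops at the escalation step because $900 exceeds the hypothetical limit.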

✅ What They're Good At:

Completing entire workflows without human intervention

Solving complex, multi-step problems

Interacting with multiple systems to accomplish goals

Making appropriate decisions within defined parameters

❌ Where They Struggle:

Require careful design to avoid mistakes

Need robust monitoring and safeguards

📌 When to Use Them: For automating complex processes where reducing human intervention creates significant value.

💰 Cost Factor: The most expensive approach, but it can offer the highest ROI for the right use cases.

📝 Cheat Sheet

Final Thoughts: What's Your Experience?

Which AI approach have you explored in your projects? Let’s discuss in the comments! 🚀